Outperforming Rayon with OpenMP

Replacing Rayon with OpenMP for additional gains

Nov 16, 2021

rust

c

perf

openmp

kzg-proofs

bls12-381

CONTENTS

Introduction

For the Blockchain Technologies course, students were paired into groups and assigned to produce the fastest Rust library implementing the KZG10 cryptographic scheme. Two teams used the

Choosing the right tool for the job

It’s a no-brainer for Rust programmers to choose Rayon when it comes to writing parallel code, as there aren’t many other viable and easy-to-use options available. While Rust does offer alternatives like std::thread, which provides access to native OS threads, the manual creation and management of threads can be cumbersome.

When I was working on my C code, I had to decide on the best approach to parallelize it. My options included:

pthread: A POSIX standard for thread creation and management.- A popular third-party threadpool library from GitHub.

OpenMP: Parallel programming library for C and C++ without manual thread management.

I chose OpenMP because, during experimentation, I discovered it yielded the best results and was relatively straightforward to use. However, I encountered a challenge in integrating it with Rust to ensure compatibility across multiple platforms, starting with Linux and possibly macOS. Eventually, I came up with the following Bash script to automate the entire process of building and packaging shared libraries. Fortunately, OpenMP was integrated into Rust by either:

- exporting the

RUSTFLAGSenvironment variable pointing to the correctlibompLLVM runtime

1# Linux

2apt install libomp-dev

3export LIBOMP_PATH=$(find /usr/lib/llvm* -name libiomp5.so | head -n 1)

4

5# MacOS

6brew install libomp

7ln -s /usr/local/opt/libomp/lib/libomp.dylib /usr/local/lib

8ln -s /usr/local/opt/libomp/include/omp.h /usr/local/include

9export LIBOMP_PATH=/usr/local/lib/libomp.dylib

10

11# And finally

12export RUSTFLAGS="-C link-arg=$LIBOMP_PATH"

- or creating a

.cargo/config.tomlfile inside the project directory and mentioning it there

1[build]

2rustflags = [

3 "-C", "link-arg=LIBOMP_PATH"

4]

Well, that was simple.

Searching for bottlenecks

In order to optimize a program’s performance, CPU profiling tools like Perf play a crucial role by providing detailed insights into where computational resources are being used. One powerful visualization tool generated by these profilers is the flamegraph, which offers a clear representation of a program’s CPU usage over time.

The flamegraph displayed above illustrates the CPU time distribution of the c-kzg library’s fft_g1 benchmark. Upon analysis, it became evident that a significant portion of the execution time was spent in assembly code, highlighting potential areas for optimization. Further investigation on fft_g1 benchmark was indeed a performance bottleneck and stood out as a prime candidate for parallelization. By parallelizing this specific operation, we can improving the overall performance of the library.

Parallelizing fft_g1

The fft_g1 function calls the fft_g1_fast function, which applies the divide-and-conquer principle to divide a large problem into smaller subproblems, recursively solving each of them. The general procedure here is to distribute work (fft_f1_fasts) among worker threads.

The blst-from-scratch team implemented it as follows:

1let (lo, hi) = ret.split_at_mut(half);

2rayon::join(

3 || fft_g1_fast(lo, data, stride * 2, roots, roots_stride * 2),

4 || fft_g1_fast(hi, &data[stride..], stride * 2, roots, roots_stride * 2)

5);

6

7for i in 0..half {

8 let y_times_root = ret[i + half].mul(&roots[i * roots_stride]);

9 ret[i + half] = ret[i].sub(&y_times_root);

10 ret[i] = ret[i].add_or_dbl(&y_times_root);

11}

As a side note, rayon::join spawns two threads, one executing each of the two closures.

The C equivalent, on the other hand, was as follows:

1#pragma omp parallel sections

2{

3 #pragma omp section

4 {

5 fft_g1_fast(out, in, stride * 2, roots, roots_stride * 2, half);

6 }

7 #pragma omp section

8 {

9 fft_g1_fast(out + half, in + stride, stride * 2, roots, roots_stride * 2, half);

10 }

11}

12#pragma omp parallel

13#pragma omp for

14for (uint64_t i = 0; i < half; i++) {

15 g1_t y_times_root;

16 g1_mul(&y_times_root, &out[i + half], &roots[i * roots_stride]);

17 g1_sub(&out[i + half], &out[i], &y_times_root);

18 g1_add_or_dbl(&out[i], &out[i], &y_times_root);

19}

In addition to parallel sections, I also used OpenMP’s parallel for-loop, because I noticed it yielded a 5% greater performance on my personal machine. Considering the ubuntu-latest runner in GitHub Actions CI had only two available cores, the halves of the problem were shared among two threads where each ran the for-loop to do arithmetic operations on polynomial G1 points.

In the above code snippets, fft_g1 calls fft_g1_fast, which up to scale 16 should at most 1 << 15 times call itself recursively, where each such call will be distributed among the 2 threads. Since we’re computing fft_g1 up to scale 8, there should be (1 << 7) + 1 tasks (not to be confused by OpenMP’s task pragma directive!) for fft_g1_fast or 129 such tasks that will be run in parallel!

Local c-kzg benchmark

Running on my personal computer with i5-7300HQ (4 threads overclocked at 3.50GHz), all mitigations turned off, and a custom Liquorix kernel, I was able to achieve the following results:

| Original c-kzg library | Parallelized c-kzg library |

|---|---|

| |

That’s twice as fast with as little effort as putting in a few pragmas!

GitHub Actions CI benchmarks

The fft_g1 benchmark was limited to scale 7 because the overall run time for the job exceeds the 6 hour limit if I were to benchmark it up to scale 16, as Criterion runs each iteration a couple of hundred times to produce more accurate results, and that used to automatically cancel other running CI jobs as jobs submitted to GitHub Actions are limited to 360 minutes.

Benchmarking blst-from-scratch

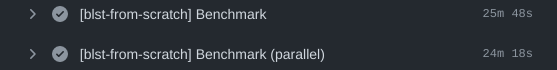

From the above screenshot we can see that the parallelized version of the library ran 1m 28s shorter than its sequential version, and below are the results of sequential fft_g1 algorithm:

1Benchmarking bench_fft_g1 scale: '7'

2Benchmarking bench_fft_g1 scale: '7': Warming up for 3.0000 s

3Benchmarking bench_fft_g1 scale: '7': Collecting 100 samples in estimated 6.6364 s (200 iterations)

4Benchmarking bench_fft_g1 scale: '7': Analyzing

5bench_fft_g1 scale: '7' time: [33.423 ms 33.785 ms 34.150 ms]

of which the average run time for scale 7 was cut down by 38.926% by its parallel counterpart:

1Benchmarking bench_fft_g1 scale: '7'

2Benchmarking bench_fft_g1 scale: '7': Warming up for 3.0000 s

3Benchmarking bench_fft_g1 scale: '7': Collecting 100 samples in estimated 6.3282 s (300 iterations)

4Benchmarking bench_fft_g1 scale: '7': Analyzing

5bench_fft_g1 scale: '7' time: [20.432 ms 20.634 ms 20.843 ms]

6 change: [-39.822% -38.926% -38.001%] (p = 0.00 < 0.05)

7 Performance has improved.

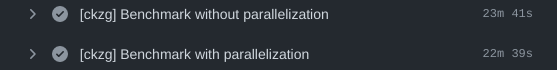

Benchmarking ckzg

The sequential version of the ckzg library ran 2m 7s faster than the same version of blst-from-scratch because it had other benchmarks that performed faster, though the parallelized version ran 1m 2s faster than its sequential version. Below are the results of the sequantial fft_g1 algorithm:

1Benchmarking bench_fft_g1 scale: '7'

2Benchmarking bench_fft_g1 scale: '7': Warming up for 3.0000 s

3Benchmarking bench_fft_g1 scale: '7': Collecting 100 samples in estimated 6.8313 s (200 iterations)

4Benchmarking bench_fft_g1 scale: '7': Analyzing

5bench_fft_g1 scale: '7' time: [32.194 ms 32.471 ms 32.760 ms]

Yet the parallel version of the fft_g1 algorithm performed much faster than it did for blst-from-scratch, even though both unparallelized versions for both teams performed evenly:

1Benchmarking bench_fft_g1 scale: '7'

2Benchmarking bench_fft_g1 scale: '7': Warming up for 3.0000 s

3Benchmarking bench_fft_g1 scale: '7': Collecting 100 samples in estimated 5.0701 s (300 iterations)

4Benchmarking bench_fft_g1 scale: '7': Analyzing

5bench_fft_g1 scale: '7' time: [16.854 ms 17.107 ms 17.439 ms]

6 change: [-48.216% -47.318% -46.306%] (p = 0.00 < 0.05)

7 Performance has improved.

Summary

- OpenMP lets you quickly prototype what is possible to parallelize with the help of CPU profiling tools like Perf

- Criterion is actually a really nice benchmarking tool to measure performance, especially when integrated into CI